Hey, I have moved! http://fernandodibartolo.wordpress.com/

Thanks!!

YAPB, yet another programming blog!

Tuesday, October 21, 2014

Sunday, July 20, 2014

World Cup thoughts & numbers for ComunidadProde

If you read my last post from June 8th, I was kind of impressed by the numbers in general for ComunidadProde because of the FIFA World Cup, such as new people signing up, visits, and so on. Well, the tournament is now over, and in terms of numbers, this is what it meant for the site (I still can't believe how close we, Argentina, came to getting the trophy :( !!)


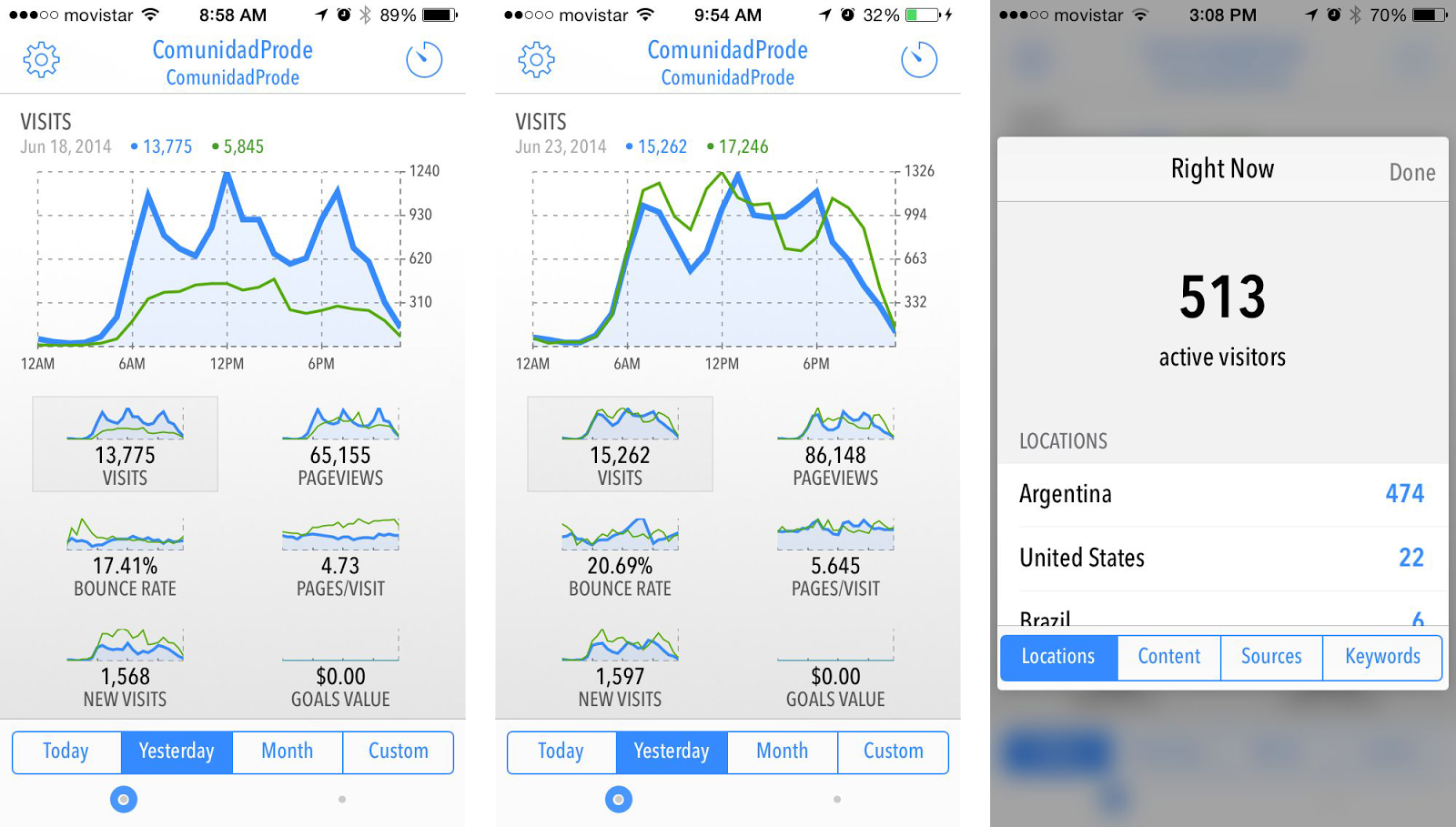


It was great to see many companies trusting us to create internal tournaments. There were big names that I'd rather not reveal, since I didn't ask for permission :P.

In terms of online public media, there were a few more articles published:

- Cronista Newspaper

- Tiempo Argentino Newspaper (also in paper)

- LaNación Newspaper

The iOS app was ranked pretty high, alternating among the top positions in the country.

Images from AppViz

On June 8th, I posted that the number of people signed up was around 5,000. Today I can say it is close to 15,000!

On June 8th, the number of visits per day was around 1,100. Today... hmm, peaks of 17,000!! I'll let the screenshots below speak for themselves :)

Images from Quicklytics, awesome app from my bud Eduardo Scoz

What's next? We'll see. I have been thinking about a UI redesign, taking advantage of the great new frameworks out there...

Sunday, June 8, 2014

Old friends: Iterative Development & Comunidad Prode

The FIFA World Cup has brought a lot of attention to the internet: social, games, portals and so on... (pause) duh, we are in 2014, aren't we?

Alright, Comunidad Prode was no exception. During the last couple of weeks, I saw a lot (I mean a lot) of new people signing up and creating user groups to have their own private tournaments.

In numbers, we had 2000 users in the system, but only 600 were active (by active I mean actually playing predictions for the last AFA season). During the last few weeks, the number of signups rose to 5000 users, with around 2800 active.

Also, while we used to have between 300 and 400 visits per day, we are now going over 1000:

Images from Quicklytics, awesome app from my bud Eduardo Scoz

Something that also caught my attention while looking at this analytics info was the referral pages. People were coming to the site, as expected, from Facebook, Google searches, etc., but surprisingly, a lot were coming from an online newspaper. The reason was this article that I share with you.

At the same time, Fernando Carolei, a journalist who covers technology and new trends on public television channels in Argentina, was promoting the site as well in some of his "live" segments. After that, we exchanged a few Twitter direct messages, and he told me that he had been using Comunidad Prode for a while and found it actually pretty good :).

Anyway, as in most of my blog posts, tech/programming stuff is present, and here is what I have today before wrapping up.

As iterative software development promotes, you might have heard not to overdesign; for a given problem and context, solve it in the "smartly-cheapest" way. In eXtreme Programming, this means DTSTTCPW: do the simplest thing that could possibly work.

That said, in the first version of Comunidad Prode, the way to handle user rankings was to calculate, on the fly, the points for a given user coming into the site. When the number of people started to grow, I started to see some heavy processing time. Long story short, rather than calculating points "dynamically", I made the calculation part of the results publishing process (this is when the results of real games are set).

In a few words, the way to do this was to get all the users playing in a given tournament, do the calculation, and then save it for future requests.
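A minimal sketch of that move, in plain Ruby and with hypothetical names (`RankingStore`, `publish_results`, `calculate_points` and the one-point scoring rule are illustrations, not the site's actual code): points are computed once when results are published and simply read back on later requests.

```ruby
# Hypothetical in-memory stand-in for the table where rankings are saved.
class RankingStore
  def initialize
    @points = Hash.new(0)
  end

  def save(user, points)
    @points[user] = points
  end

  def points_for(user)
    @points[user]
  end
end

# Assumed scoring rule, for illustration only: 1 point per exact guess.
def calculate_points(predictions, results)
  predictions.count { |match, score| results[match] == score }
end

# Runs once, when the real results are published.
def publish_results(store, predictions_by_user, results)
  predictions_by_user.each do |user, predictions|
    store.save(user, calculate_points(predictions, results))
  end
end

results = { 'match-1' => '2-1', 'match-2' => '0-0' }
predictions_by_user = {
  'ana'  => { 'match-1' => '2-1', 'match-2' => '0-0' },
  'luis' => { 'match-1' => '1-1', 'match-2' => '0-0' }
}

store = RankingStore.new
publish_results(store, predictions_by_user, results)

# Later requests only read the saved value; nothing is recalculated.
puts store.points_for('ana')
puts store.points_for('luis')
```

The trade-off is the usual one: reads become cheap, and the cost moves to the publishing step.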

Considering that the number of active users is going from 600 to 3000, I am anticipating a bottleneck here; processing time will definitely go up, so it is time to *refactor*. What's the simplest solution here? Maybe multithreaded processing. Let's try that, and see... I leave you with these lines of code!


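```ruby
# ENV values are strings, so convert before using the value as a number
page_size = (ENV['PUBLISH_PAGE_SIZE'] || 500).to_i
users = week.tournament.users.to_a

# Slice the users up front so no thread mutates a shared array
threads = users.each_slice(page_size).map do |page|
  Thread.new(page) do |users_page|
    update_ranking_for week, users_page
  end
end

# Wait for every page to finish
threads.each(&:join)
```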

Before, the method update_ranking_for just took the week as a param, and the users to update were figured out inside it. Now a group of users is passed in, and the processing happens in parallel. In theory, if 600 users took a minute to process, doing it in three groups of 200 brings the time down to 20 seconds (1/3 of a minute).

Enjoy!
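If you want to play with the paging idea outside the app, here is a self-contained sketch. It only borrows the name `update_ranking_for` from the code above; its body, the fake user ids, and the 0.1s `sleep` standing in for database time are assumptions.

```ruby
# Stand-in for the real ranking update: pretend each page takes 0.1s of
# database time, then record which users were handled.
def update_ranking_for(week, users_page, processed, lock)
  sleep 0.1
  lock.synchronize { processed.concat(users_page) }
end

week = :week_1
users = (1..600).to_a # fake user ids
page_size = 200

processed = []
lock = Mutex.new
started = Time.now

# One thread per page of users
threads = users.each_slice(page_size).map do |page|
  Thread.new(page) do |users_page|
    update_ranking_for(week, users_page, processed, lock)
  end
end
threads.each(&:join)

elapsed = Time.now - started
puts "pages: #{threads.size}, users processed: #{processed.size}"
puts format('elapsed: %.2fs', elapsed)
```

On MRI, threads mostly overlap while they wait on I/O such as database calls, so the real gain depends on how much of the work is spent waiting.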

Saturday, May 3, 2014

Comunidad Prode keeps on growing

Exactly 2 years ago, the first commit to the git repo was made.


Today, I am releasing code base 3.0, which, in terms of end users, means support for not only "league-style" tournaments but also "cup-style" ones. The reason is quite straightforward: the ability to play predictions for the FIFA World Cup 2014, given the large group of users who asked for this feature.

Since this is a programming blog, let's get to the point. From the domain perspective, even though this is arguable, there were differences in how to represent a regular match versus a World Cup match (knockout match from now on). Even though a match has a date and time to be played, we never wanted to tie that to the match, just because the AFA schedule is a real mess, with kickoff times changing from one day to another. That said, the decision was for the week to have a date (one week has many matches), and past that date, the related match results can no longer be predicted.

The situation for the knockout matches was different: we did want to predict on a match-by-match basis, and we needed to group them by an identifier (group A, group B, semi-final, etc.).
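The two deadline rules can be sketched in plain Ruby as follows. The structs, the `predictable?` method, the team placeholders, and the dates are all made up for illustration; only the idea (one deadline per week versus one per knockout match, plus a group label) comes from the description above.

```ruby
require 'time'

# League style: the week carries the deadline for all of its matches.
Week = Struct.new(:name, :deadline) do
  def predictable?(now)
    now < deadline
  end
end

# Cup style: each knockout match has its own kickoff and a group label.
KnockoutMatch = Struct.new(:teams, :group, :kickoff) do
  def predictable?(now)
    now < kickoff
  end
end

week = Week.new('Week 1', Time.parse('2014-08-01 18:00:00 UTC'))
matches = [
  KnockoutMatch.new('A vs B', 'Group A', Time.parse('2014-06-12 20:00:00 UTC')),
  KnockoutMatch.new('C vs D', 'Group B', Time.parse('2014-06-13 19:00:00 UTC'))
]

now = Time.parse('2014-06-13 00:00:00 UTC')

# Knockout matches are grouped by their identifier and checked one by one.
by_group = matches.group_by(&:group)
open_matches = matches.select { |m| m.predictable?(now) }.map(&:teams)

puts week.predictable?(now)
puts by_group.keys.inspect
puts open_matches.inspect
```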

Now, when a user predicts a match, that gets persisted as a user_match. The relation between both is that each user match belongs to a match, but now it can also belong to a knockout match. How to achieve this, at least decently? I had the opportunity to use Active Record polymorphic associations a year ago or so, and I saw a perfect fit for them here as well. Here is the outcome.

```ruby
class Match < ActiveRecord::Base
  has_many :user_matches, as: :related_match
  # ...
end

class KnockoutMatch < ActiveRecord::Base
  has_many :user_matches, as: :related_match
  # ...
end

class UserMatch < ActiveRecord::Base
  belongs_to :related_match, polymorphic: true # match or knockout_match
  # ...
end
```

So now, by calling user_match.related_match, you get the associated "real" match, no matter whether it is a match or a knockout match, for instance to get the real score and calculate the user's prediction points.
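Under the hood, a polymorphic `belongs_to` keeps two values on the owning row, a type and an id (here `related_match_type` and `related_match_id`), and uses them to find the right record. The plain-Ruby sketch below mimics that lookup without Rails; the `REGISTRY` hash, the structs, and the scoring rule in `points` are assumptions made for illustration, not the site's code.

```ruby
# Plain-Ruby stand-ins for the two kinds of "real" match.
Match         = Struct.new(:id, :home_goals, :away_goals)
KnockoutMatch = Struct.new(:id, :home_goals, :away_goals, :group)

# Hypothetical registry playing the role of the database tables.
REGISTRY = {
  'Match'         => { 1 => Match.new(1, 2, 1) },
  'KnockoutMatch' => { 1 => KnockoutMatch.new(1, 0, 0, 'Group A') }
}.freeze

# A prediction stores the type and id of whatever it points at.
UserMatch = Struct.new(:related_match_type, :related_match_id,
                       :home_goals, :away_goals) do
  # Resolve the "real" match from the stored type and id.
  def related_match
    REGISTRY.fetch(related_match_type).fetch(related_match_id)
  end

  # Assumed scoring rule: 3 points for the exact score, 0 otherwise.
  def points
    real = related_match
    [home_goals, away_goals] == [real.home_goals, real.away_goals] ? 3 : 0
  end
end

league_guess = UserMatch.new('Match', 1, 2, 1)
cup_guess    = UserMatch.new('KnockoutMatch', 1, 1, 0)

puts league_guess.related_match.class
puts league_guess.points
puts cup_guess.related_match.class
puts cup_guess.points
```

The calling code never has to ask which kind of match it is dealing with, which is the whole point of the association.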

The rest of the changes revolve around that, and those that don't are the usual gem version upgrades to stay up to date, for instance, bumping Rails from 4.0.4 to 4.1.0, as well as Ruby from 2.0.0 to 2.1.1.

Monday, February 3, 2014

How long does it take?

Here is the scenario: a user of your system (end-user, business, stakeholder, etc.) finds a bug, which gets reported to you. You analyse it, you know how to fix it, you go right ahead and code it in; but how long does it take for your user to actually see it implemented? Think of that bug as any code change: a real defect, a new feature, whatever. If it takes a few hours to get it coded, why does the user need to wait a week, or two, to actually see it? Oh, I see, this is why:

- the developer needs to stop doing what he is doing, developing!

- build the code

- change a few configs

- make the deploy package

- submit a request to the deployment team

- call one of your contacts cause deployment team is not responding

- call again, luckily someone is available

- got an email “Deployment completed!”

- open the site to run a smoke test, 500s all over the place :o

- jump on a conference call, bla bla bla

- … (oh my God, I said not to delete that file)

- deployment finished

- let QA know to go ahead and test it

- QA is on the other side of the world, not gonna happen today

- QA finds something, another email, another day

- change the code, another email, another day

- done! staging looks good; what, staging? Yes, but don’t worry, moving this to production will be straightforward (ha!)

- (more or less, I think I made my point clear)

Continuous Delivery (CD) has been around since the early days of agilism (is that a word?) and extreme programming, and before that as well, if we dig into the lean manufacturing principles of the Toyota Production System. These days, with the popularity of DevOps and the cloud, CD has exploded, and tons of options are available to overcome the aforementioned situation, technology-specific as well as technology-agnostic.

I was not planning on writing this much; I'd rather show what I've got. The following is just one setup that I have for one specific project. If you look at it with a clinical eye, you will spot areas for improvement (there are some), but it fulfils the needs as is (push your decisions up to the point where you really need to make them, rather than “guessing” what’s gonna happen, as Martin Alaimo told me once).

Alright, this is my deployment pipeline; it is a visual representation of the health of the system and environments. The more boxes (jobs) you have, probably the better, since if any of them turns red, you know exactly where to look.

This is running on a GUI-less Ubuntu server; Jenkins is the continuous integration server, and the Build Pipeline plugin is what gives you the graphical dashboard.

Let's break down each job to understand its responsibility.

- CEMS_CI

  - Git is used as the SCM, so the Jenkins git plugin needs to be installed

  - Poll the upstream git repository at night, which is alright in terms of a daily build, but not exactly what I would like; I'll tell you why. Jenkins exposes a REST web API that you can leverage to request a given job to run. That said, it is fairly simple to configure a post-receive git hook to run a curl command, so what you would accomplish with that is one build per developer commit (even more frequent feedback on the system's health). In the case of this project, the git repo is an in-company instance of Gitorious, so the development team has no access to manipulate the hooks. GitHub does give you that possibility, which is neat, so if GitHub is your upstream repo, and your Jenkins is accessible through the internet, you could accomplish this.

  - Pull the latest changes from the upstream git repository. This will only look at the master branch, so any code not ready to be integrated needs to be on its own branch (refer to this great branching strategy). The job will run under the jenkins user, so you need to make sure that it is able to connect to the repo over ssh, load everything needed on its PATH, rvm, etc. (not in the scope of this post)

  - Run a bash script to install new ruby gems and run common rake tasks. The last task, running your tests, should really be your QA. If all tests pass, you must be able to ship the software without fear.

    ```bash
    #!/bin/bash
    bundle install
    bundle exec rake db:migrate
    bundle exec rake db:seed
    bundle exec rake db:test:prepare
    bundle exec rake ci:setup:rspecdoc spec
    ```

  - Publish rails stats and ruby code coverage results; in terms of quality, you can set it up to make the build fail if coverage goes below a given % threshold

  - Tag the upstream repo for traceability (read more about git tags)

  - and finally, if all above steps are ok, automatically trigger the next job Deploy to Dev

- Deploy to Dev

  - Clone the repo, push it to a bare local one, created to mimic the upstream one (given that one is within our control, we can manage the hooks at our own will)

    ```bash
    #!/bin/bash -e
    echo "Preparing to deploy dev..."
    if [ -d "./tmp" ]; then
      echo "Removing old files"
      rm -rf "./tmp"
    fi
    mkdir tmp
    git clone git@[repo_url].git tmp
    cd tmp
    git remote add [remote] "/opt/git/[local_repo].git"
    echo "Pushing source code to dev..."
    git push [remote] master
    echo "Done!"
    ```

  - Now, on the post-receive hook of the local repo, run this (make sure it is executable first)

    ```sh
    $ chmod +x hooks/post-receive
    ```

    ```sh
    #!/bin/sh
    # hooks/post-receive
    # Deploy Development environment
    echo "Sit back, your deployment is kicking off..."

    # Kill server
    kill -9 $(pidof ruby)

    APP_PATH="[my_app_path]"
    if [ -d "$APP_PATH" ]; then
      echo "Backing up old version..."
      ./backup_site.sh
    fi

    # The working copy is kept in place so that git pull can update it
    cd "$APP_PATH"
    unset GIT_DIR
    git pull origin master
    bundle install
    bundle exec rake db:migrate
    bundle exec rake db:seed

    # Start server once again in the background
    # (plain sh has no &> shortcut, so both streams are redirected explicitly)
    nohup rails server > /dev/null 2>&1 &
    ```

  - and finally, if all above steps are ok, automatically trigger the next job Dev Readiness

- Dev Readiness

  - Its responsibility is to ask the app if it is up and running by executing a curl command to a preconfigured route

    ```bash
    #!/bin/bash
    curl -X GET 'http://localhost:[port]/health' -I
    ```

  - finally, its post-build action is set to manually trigger a deploy to the next environment (this is the only human interaction needed to get the app on the next server, upgraded and running)
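On the app side, the preconfigured route only needs to answer quickly with a success status. Here is a minimal sketch of such an endpoint as a Rack-style callable in plain Ruby (no gems needed to run it). The `/health` path comes from the curl command above; the `HEALTH_CHECK` name and the response bodies are assumptions.

```ruby
# A Rack-style app is any object that responds to call(env) and returns
# [status, headers, body]. This one answers 200 on /health, 404 elsewhere.
HEALTH_CHECK = lambda do |env|
  if env['PATH_INFO'] == '/health'
    [200, { 'Content-Type' => 'text/plain' }, ['OK']]
  else
    [404, { 'Content-Type' => 'text/plain' }, ['Not Found']]
  end
end

status, _headers, body = HEALTH_CHECK.call({ 'REQUEST_METHOD' => 'GET',
                                             'PATH_INFO' => '/health' })
puts "#{status} #{body.join}"
```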

The Deploy to Stage job will pretty much do the same as Deploy to Dev. We can then add jobs like this one for any other environment we want to manage, with very low effort: repeatable, repeatable, repeatable.
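The post-receive idea mentioned under CEMS_CI (one build per push instead of a nightly poll) could look roughly like this as a hook written in Ruby. Jenkins can start a job when it receives a POST on the job's `build` URL, provided remote triggering is enabled for that job. The host, job token, and `build_trigger_uri` helper below are placeholders, and the actual request is left commented out so the sketch runs without a Jenkins server.

```ruby
#!/usr/bin/env ruby
# hooks/post-receive (hypothetical): ask Jenkins to run a job after a push.
require 'net/http'
require 'uri'

# Build the URL Jenkins listens on for remote build requests.
def build_trigger_uri(jenkins_url, job_name, token)
  uri = URI.join(jenkins_url, "job/#{job_name}/build")
  uri.query = URI.encode_www_form(token: token)
  uri
end

uri = build_trigger_uri('http://jenkins.example.com:8080/', 'CEMS_CI',
                        'my-secret-token')
puts "Triggering #{uri}"

# Uncomment once the placeholders point at a real Jenkins instance:
# response = Net::HTTP.post_form(uri, {})
# puts "Jenkins answered #{response.code}"
```

Depending on how Jenkins security is set up, the request may also need credentials, so take this as a starting point only.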

Wrapping up, I don't want to leave without mentioning Nico Paez, who was the one who showed me the Jenkins build pipeline for the first time, at one of the many agile events where we connect.

Saturday, July 27, 2013

Kicking off second year of Comunidad Prode

We are just a few days away from a new season of the Argentine professional soccer league, which, in terms of Comunidad Prode, means the site's second year of life!

During the off-season (and a little before too), I have tried to focus on upgrading the site, in order to always bring end users a better experience. That means keeping up with the latest and greatest versions of frameworks, gems, etc., as well as improving performance by running different sets of profiling tools. The major upgrades are as follows...

- Upgrade rails from 3.2.x to 4.0.0, going through the beta and rc releases

- Migrate from ruby 1.9.3 to 2.0.0

- Along with that, update tons of gem dependencies!

- Performance enhancements

  Fernando Di Bartolo (@fdibartolo), June 8, 2013: some more perf (not pert as just tweeted :P)… @ComunidadProde pic.twitter.com/2uALVHOoGt

  Fernando Di Bartolo (@fdibartolo), June 8, 2013: some pert improvements that will come soon along with #rails4 @ComunidadProde pic.twitter.com/cokoaStr4S

- Decoupling classes, increasing code coverage, and much more...!

Sunday, July 21, 2013

Screencasting the RomanNumerals kata

In every aspect of life, it is known that practicing something will make you better at it. Sportsmen practice long hours before a given competition, musicians practice as well before performing on stage, but we software people (developers, architects, etc.) do not always do the same thing.

Quoting Robert Martin (aka Uncle Bob) in his book The Clean Coder, "you should plan on working 60 hours per week. The first 40 are for your employer. The remaining 20 are for you [...] you should be reading, practicing, learning, and otherwise enhancing your career. [...] During that 20 hours you should be doing those things that reinforce that passion [towards software]. Those 20 hours should be fun!"

That said, I have decided to record and share the following kata, hoping that someone picks it up and it gets contagious, in a good way :).

Audio is in Spanish though, bummer, I know.

Closing... If you haven't read The Clean Coder, and now you feel like doing it, I humbly suggest reading Clean Code first.
